AI experienced through AI co-created tools

AI co-created tools and social spaces as a new medium

AI embodied as AI co-created tools

One of the biggest questions is what forms AI will take in our lives:

This newsletter explores the position that we’ll experience AI through deeply engaging, personally adapted AI co-created tools.

This post will cover:

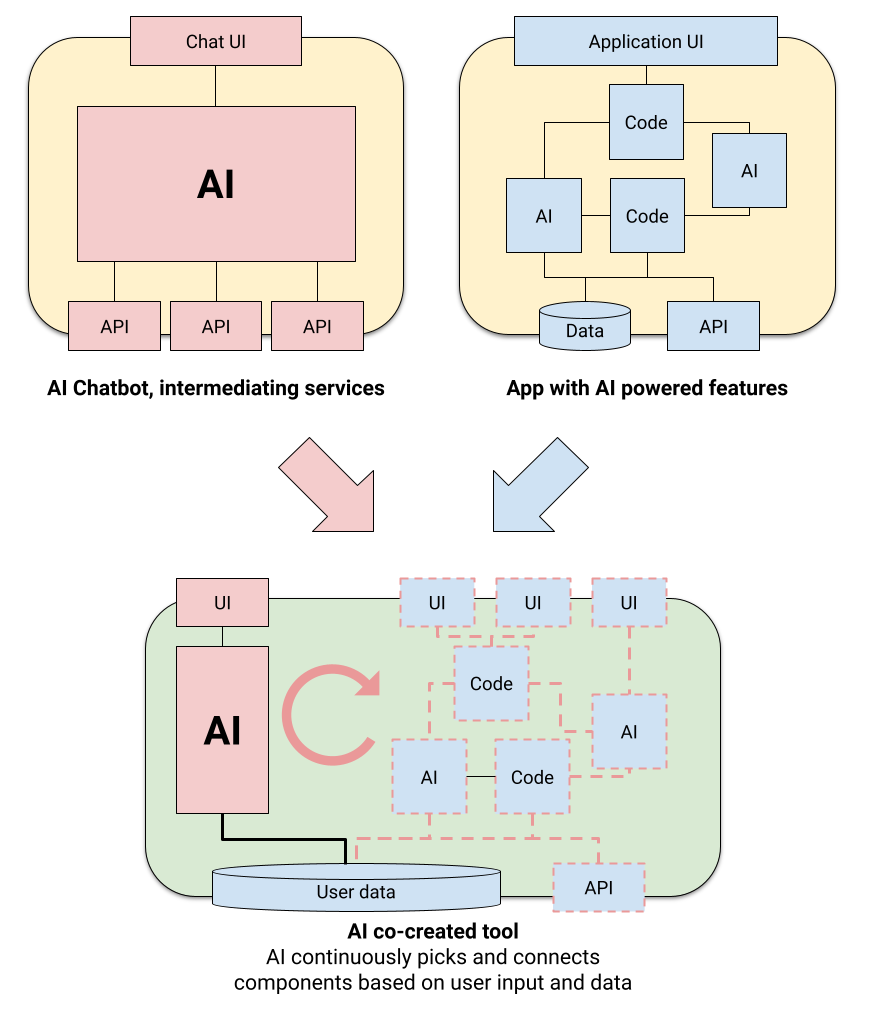

Despite their current popularity, chat interfaces aren’t the future of UX: Instead the power of AI co-created tools is to combine use-case specific UX with the generality of chatbots. They are as powerful as apps with AI features, but personalized, always adapting to contexts and instantly available.

The importance of Human-AI co-creation: Not just for the individual tools, but the ecosystem as a whole. “The AI” we experience isn’t a single AI agent, it’s more like the web: We’ll be interacting with a medium and participating in its open ecosystem. Not separate from us, but an extension of us and what we have built together.

The need for changes to our computing systems: These tools should make good use of potentially all our data, and so privacy and safety are key. But today’s permission system doesn’t make sense if the origin is an AI system. Nor will today’s dominant apps and services model make sense if there are infinitely many of them. Instead of today’s origin-centric architecture, this post imagines a privacy- and safety-first open platform whose protections allow new use-cases while lowering friction and management overhead.

Personal tools

AI is already deeply embedded in today’s apps and services, with new features powered by generative AI being added constantly. Chatbots like ChatGPT with plugins have captured widespread attention; some even predict conversational interfaces as the next major platform.

However, people pay too much attention to ChatGPT as an interface paradigm, rather than the underlying capabilities. After all, many ChatGPT use-cases are better served when directly integrated into tools, for example writing code in IDEs instead of copy & pasting. On the other hand, each AI-enhanced app is constrained to what its creator scoped out.

Instead, let’s talk about AI as the engine behind a new kind of experience: Rich, interactive, multi-modal experiences like our apps today, yet as adaptable as chatbots and tailored to personal needs. What this newsletter calls AI co-created tools.

Instead of conversational being the next big UI paradigm, think of it as additive to direct manipulation and other forms of interaction. Our visual cortices are the fastest way we consume information, our hands magnificent manipulators. Why presume that language is the end-point? Instead imagine a new computing medium that is shaped by language, but uses all available input and output methods.

This is also a departure from today’s generic one-size-fits-all apps that appeal to the lowest common denominator of a large enough market to sustain the app. This effect is amplified with social apps that rely on network effects – users have to pick between good collaboration and the functionality they want, or tediously convince their friends to switch services. At the same time most real world tasks get spread across these apps, burdening users not only with constantly switching apps and awkwardly bridging gaps (screenshots!), but also with having to remember which of their data lives where: A privacy nightmare on top of an unnecessary management burden.

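What if software instead adapted to its users and became personal? It’s not a new idea; Alan Kay wrote this in 1984:

“Personal computer imply personal software. [...] We now want to edit our tools as we have previously edited our documents”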

— Alan Kay, Opening the Hood of a Word Processor, 1984 (via Geoffrey Litt)

And what if it is also collaborative, adapted to the needs of small groups, by members of that group? Like Clay Shirky’s Situated Software: “Software designed in and for a particular social situation or context, rather than for a generic set of ‘users’.” That article was written in 2004, when there was still a lot of experimentation in social software. Since then the need for the aforementioned network effects, and thus relentless optimization for engagement, has dominated the space. Maybe we can go back to social spaces being more meaningful, because they are built by and for their inhabitants?

Enter LLMs

These ideas are not new. What is new are LLMs. They are poised to make this a reality! See e.g. Geoffrey Litt’s malleable software in the age of LLMs. They can break down a problem into components and connect them together. Just like in a regular app, components can be UI, other code or AI models and prompts. And of course LLMs can invoke themselves as AI components or even write some of the code or UI components.

And this is where natural language will shine: Instead of controlling your computing experience by installing apps and having to bend your mental model to fit them, expect computing to fit how you think and use natural language to further evolve and guide it.

There’s a feature missing in your tool? Just ask for it – or better yet state what you want to do next! Tools shape-shift throughout the task. The LLM can even anticipate possible next steps, speculatively execute them, discard bad options and show users possible options as a natural part of the user interaction, illustrated by real results.
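
The anticipate, execute, discard and present loop can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a description of any existing system: the names `Option`, `speculate` and `looks_reasonable` are hypothetical, and the hard-coded `candidates` stand in for next steps that an LLM would propose in a real tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Option:
    """A candidate next step, illustrated by the result of actually running it."""
    label: str
    result: float


def speculate(
    state: float,
    candidates: Dict[str, Callable[[float], float]],
    looks_reasonable: Callable[[float], bool],
) -> List[Option]:
    """Run every candidate step against the current state and keep the good ones."""
    options = []
    for label, step in candidates.items():
        try:
            result = step(state)  # speculative execution; `state` itself is untouched
        except Exception:
            continue  # a step that fails is discarded without bothering the user
        if looks_reasonable(result):
            options.append(Option(label, result))
    return options


# Hypothetical candidates (assumption: an LLM would normally propose these).
candidates = {
    "halve contact rate": lambda r0: r0 / 2,
    "add vaccination (60%)": lambda r0: r0 * (1 - 0.6),
    "divide by zero contacts": lambda r0: r0 / 0,  # fails, so it is discarded
    "negative transmission": lambda r0: -r0,       # implausible, so it is discarded
}

options = speculate(3.0, candidates, looks_reasonable=lambda r: r >= 0)
for option in options:
    print(f"{option.label}: R0 becomes {option.result:.2f}")
```

Only the surviving options are shown, each with a real result attached, which is what lets the user pick by outcome rather than by description.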

For example, a teacher preparing a class on epidemiology might start with a calculator for transmission rates, expand that to a full-fledged simulation and next turn it into a grade-appropriate tutoring tool complete with generated support material. Each transition is just a sentence away.

Or you might want help planning your garden. The tool will research choices of plants for your climate and sun exposure, and present choices with criteria that matter to you – e.g. based on your values suggesting to avoid invasive plants even though they are popular choices. It will automatically render how your garden will look in each season. It’ll then transform into a tool that helps you maintain your garden, taking local precipitation into account and using your camera to diagnose diseases (remembering what you planted, unlike today’s tools). And come harvest time, it’ll integrate into recipe suggestions, connect you to the local produce exchange, etc.

We see glimpses of this in ChatGPT, not just in how it can combine plugins to suit a task but in how the most powerful prompts start with “you are a …”: Those prompts often define tools, sometimes down to commands (see these examples and many others on FlowGPT)! What if they were rich, interactive tools instead of a text adventure?

Besides richer UI, these tools will also retain other functionality like the ability to undo, to navigate within, to share a specific state (and let others continue from there), and so on – none of which is possible with chatbot UIs.

In addition though, AI co-created tools are software you will be able to have a conversation with about itself! Not just asking it to add missing features, but thanks to LLMs’ surprising capability for self-reflection, users will be able to ask how something was computed. Or maybe they point out that something doesn’t make sense and it’ll attempt to self-correct. Or it might notice itself that a result doesn’t quite make sense and fix itself. There are several feedback loops, at creation time, but also later during usage.

It’s a different breed of software. A bit squishy and less predictable in some ways, but eventually also more resilient to a changing environment. Note that it’s not just LLM computations. Those tools can be composed of regular code, calling regular APIs, and so on. But when they encounter an unanticipated error, a new corner case, the higher AI gear kicks in and can adapt to it. It’s software that is never finished.
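
One way to picture the “higher AI gear” is as a fallback wrapped around regular code. The sketch below is an assumption-laden illustration: `resilient`, `parse_amount` and `ai_repair` are hypothetical names, and `ai_repair` is a plain stub standing in for what would be a model call in a real system.

```python
from typing import Callable, Optional


def parse_amount(text: str) -> float:
    """Regular code path: fast, predictable, handles the anticipated format."""
    return float(text)


def ai_repair(text: str, error: Exception) -> Optional[str]:
    """Stand-in for the 'higher AI gear'.

    Assumption: a real system would ask a model to reinterpret the input.
    This stub only knows how to strip a dollar sign and thousands separators.
    """
    cleaned = text.replace("$", "").replace(",", "").strip()
    return cleaned if cleaned != text else None


def resilient(
    primary: Callable[[str], float],
    repair: Callable[[str, Exception], Optional[str]],
) -> Callable[[str], float]:
    """Wrap regular code so an unanticipated error triggers one repair attempt."""
    def run(text: str) -> float:
        try:
            return primary(text)
        except ValueError as error:
            repaired = repair(text, error)
            if repaired is None:
                raise  # the fallback could not help either; surface the original error
            return primary(repaired)
    return run


parse = resilient(parse_amount, ai_repair)
print(parse("42.5"))       # handled by regular code
print(parse("$1,250.00"))  # regular code fails, the fallback adapts the input
```

The regular path stays cheap and predictable; the adaptive path only runs when something unanticipated happens, and it still fails loudly when it cannot help.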

Better with friends

Many of our most important tools are collaborative. Yet most of our actual collaborations are spread across many poorly integrated services.

The author recently went on a backcountry skiing trip with friends, and organizing it quickly involved more than a dozen apps and services, from WhatsApp to Docs to Splitwise and so on. Some of these – e.g. booking flights – are single-player today, but could be collaborative instead (who doesn’t have dozens of screenshots with possible flights in their messengers?). And in the end our photos and videos are spread across several services, dreams of using generative AI for fun memories lost to too much friction.

Daily activities like family logistics and meal planning involve keeping up with many lists, schedules and frequent changes all spread across many apps and websites and involving many participants, in and outside the family.

Imagine if instead everyone had personal tools that bring not just functionality and data, but also people together. Trips, family logistics, and so on would become seamless, with fluid functionality and automatic integration with others’ tools (e.g. class schedules managed by teachers appear in a family’s tool). No more data silos. At first geared for efficiency in the planning phase, the tool would evolve towards enhancing the experience of e.g. the trip (mostly by getting out of the way) and afterwards towards reliving fond memories. And finally it would serve as a template for the trip next year.

With other people is also where goals emerge and new approaches are formed, and hence also influence what tools we need and how they should function. Collaborative settings are an important source of “requirements” for AI co-created tools, and also a way the best solutions can spread.

And this isn’t just about efficiency. These collaborative tools are AI co-created spaces that can support what is meaningful to participants. Maybe it’s a place for spiritual practice. Or neighbors can build tools and spaces specifically suited to supporting their local communities’ well-being.

Tool co-creation is a natural part of the group interaction, from bringing in supporting functionality (without everyone else having to create accounts in some new service!) to evolving the design. Here, as with tools, the emotional connection matters. And while great tool makers today spend a lot of effort getting the brand right, they still appeal to broad markets. Especially with AI supporting everyone’s creativity, computing could feel a lot more comforting, inspiring, refreshing or empowering in a personally meaningful way.

Tools can be published

AI co-created tools also change how we’ll interact with businesses. Instead of the traditional one-size-fits-all website or app, businesses will develop “recipes” for highly tailored tools that adapt to individual customers.

A restaurant, for example, might create a dynamic menu customized to your personal tastes, allergies, and dining history, suggesting options perfect for your palate. Ecommerce stores will become instantly personalized shopping tools that integrate with your shopping and to-do lists. Transportation services will provide intermediating tools that know about your plans. New forms of interactive media could emerge, based on the same idea of tools recipes, but describing an interactive generative AI experience that adapts to its viewers.

This newsletter calls these tool templates recipes, as just like regular recipes they are a baseline that can be modified or built on. The corresponding tool can be instantiated following the instructions as closely as possible. Or features might be added or removed, or the parts of the tool might just become a feature in a different tool of bigger scope.
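
As a rough sketch of what “a baseline that can be modified or built on” could mean in data terms, consider the following. Everything here is hypothetical: `Component`, `Recipe` and the method names are illustrative assumptions, not an existing format or API.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class Component:
    """A building block: UI, regular code, a model, or a prompt."""
    name: str
    kind: str  # one of "ui", "code", "model", "prompt"


@dataclass(frozen=True)
class Recipe:
    """A published baseline that a user's system can instantiate or modify."""
    title: str
    components: Tuple[Component, ...] = ()

    def with_component(self, component: Component) -> "Recipe":
        """Return a copy of the recipe with one feature added."""
        return replace(self, components=self.components + (component,))

    def without(self, name: str) -> "Recipe":
        """Return a copy of the recipe with one feature removed."""
        kept = tuple(c for c in self.components if c.name != name)
        return replace(self, components=kept)

    def embedded_in(self, larger: "Recipe") -> "Recipe":
        """Turn this recipe's parts into a feature of a tool with bigger scope."""
        return replace(larger, components=larger.components + self.components)


menu = Recipe("Restaurant menu", (
    Component("dish list", "ui"),
    Component("allergy filter", "code"),
    Component("taste ranking", "model"),
))

# Follow the recipe closely, but drop one feature and add another.
my_menu = menu.without("taste ranking").with_component(Component("price cap", "code"))

# Or fold the adapted menu into a larger evening-planning tool.
evening = my_menu.embedded_in(Recipe("Evening out", (Component("calendar", "ui"),)))
print([c.name for c in evening.components])
```

Recipes are immutable in this sketch, so every modification yields a new recipe and the published baseline stays intact for others to build on.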

Businesses get the ability to serve customers in an intensely customized fashion. Customers get experiences that fit them like a glove. The possibilities for digital transformation are endless and businesses that tap into this trend will gain a powerful competitive advantage. But only if they are actually aligned with what their customers want:

Privacy and Safety ensured by the platform

Of course, naïvely giving businesses such power would be disastrous! It would reinforce many anti-patterns of today’s ecosystem like engagement farming or introduce new ones like generating highly tailored manipulative media.

To do this responsibly, we must shift power from companies to people and their communities. The key is that the platform protects privacy while enforcing AI ethics within limits set by users themselves. This starts with how these tools are constructed and instantiated:

Rather than sending our data to opaque services, the tools will come to us. Businesses propose tools, but our own systems choose what to run, how, and on what terms. The runtime sandboxes each tool and by default, publishers don't even know we are using their tools.

If data is sent back (e.g. for a purchase), the system ensures that no extraneous data used for personalization is leaked. And the system ensures that any use of personal data aligns with the user’s values, enforcing AI fairness and safety guardrails. And of course the tools themselves remain malleable: We can add, remove or modify functionality at any time.
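
The simplest possible stand-in for “no extraneous data is leaked” is an allowlist applied at the sandbox boundary. The sketch below assumes a hypothetical `egress` function and made-up field names; the platform imagined here would need much stronger guarantees than this.

```python
from typing import Any, Dict, FrozenSet, List, Tuple


def egress(
    tool_output: Dict[str, Any],
    allowed_fields: FrozenSet[str],
) -> Tuple[Dict[str, Any], List[str]]:
    """Split a tool's outgoing message into what may leave and what is withheld."""
    sent = {key: value for key, value in tool_output.items() if key in allowed_fields}
    withheld = sorted(set(tool_output) - allowed_fields)
    return sent, withheld


# Hypothetical order assembled inside a sandboxed restaurant tool.
order = {
    "items": ["margherita"],
    "payment_token": "tok_example",
    "allergies": ["peanuts"],           # used locally for personalization
    "dining_history": ["2023-04-01"],   # used locally for personalization
}

sent, withheld = egress(order, allowed_fields=frozenset({"items", "payment_token"}))
print("sent to publisher:", sent)
print("kept on device:", withheld)
```

A field allowlist does not stop personalization data from influencing the values of the permitted fields, so it only illustrates the direction of the idea: the user's system, not the publisher, decides what leaves.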

Instead of a few companies controlling technology, data and our digital lives, we become the centers of our own digital experience. We access useful tools and services, but on our own terms. Power balances shift to align more directly with human interests and values.

This is also a lens on how to deploy AI safety and alignment research. Both directly as criteria to evaluate what a recipe is proposing, and more broadly: Replace “businesses” with “AI” above, and for both we need protection from malevolent instances. This is another piece to the puzzle, complementing other research.

See more about the technical background in this draft post about empowering users by inverting three key relationships.

Open ecosystem and the virtuous cycle that grows it

Publishing of recipes and the components they use is the kernel of a new, open ecosystem. Components can be AI models, they can be prompts, they can be regular code and they can be specific UX designs that are tailored to some use-cases of either. It’s a collaborative ecosystem that allows reuse and building on each other. This newsletter will explore many examples of this.

The AI’s role is co-creating or adapting tools, i.e. creating recipes by composing components and adapting or maybe entirely generating components.

There’s a positive feedback loop between AI and people that will lead to continuous growth in capabilities. People will fill gaps and make corrections to improve on what the AI already did, and they will seed entirely new areas. The AI will automatically expand to adjacent spaces, and it will itself open new areas through transfer learning. Then people build on that, the AI builds on top, and so on: We have a virtuous cycle of growth.

The underlying AI will learn from people, but crucially people will share directly with each other, and use the AI to improve on each other’s work. People will refer to specific approaches, not just generic task descriptions:

Lowering the barrier to entry unlocks pent-up demand to participate

Our tools are an integral part of our culture, and humans will always want to play an active and direct role in shaping them. We will collectively strive to influence each other's experiences, whether it's for higher goals, economic opportunities, or status games. This newsletter doesn’t believe that we’ll be happy to just delegate that to AIs: Being able to make, protect and teach is innate to us.

This is also a way out of a world where our digital experiences are dominated by a few big players, and where the threshold to contribute is too high for many:

There is latent demand for more participation in shaping our tools that we can tap into, causing the pendulum to swing back from an era of concentration.

This will be unlocked by lowering the barrier to entry, letting people evolve tools and build on other people’s work, and by making the tools more powerful by default thanks to built-in collaboration and safe use of more of the users’ data.

So there will be an ecosystem. And it will have broad participation thanks to many new roles that emerge, for many ways of making and teaching, but also for protecting by actively participating in a new form of collective governance (more in a later post).

See this draft post on ecosystem requirements for more thoughts.

The open ecosystem is a profound aspect of the vision:

The AI is the ecosystem, not a single model

Of course the system will contain models, and maybe they are even all based on a single large foundation model. But this newsletter believes that this view of treating the model as the AI is the wrong lens:

It's a mirror, a reflection and extension of human nature, not an independent system. In that way, it’s like the web: We’ll be interacting with a medium and participating in its open ecosystem. It’s bigger than a single AI agent.

Ben Hunt calls this artificial human intelligence and Jaron Lanier calls the creation process an innovative form of social collaboration.

We should see such a system not as separate from us, but as an extension of us and what we have built together. Through generations of work, humanity has developed knowledge and technologies to improve our lives. This system aggregates that progress, combining inventions and discoveries in a feedback loop where each new creation spurs another, accelerating our shared journey of progress.

It will amplify our abilities by aggregating our culture, not by standing alone. We will instill it with our values and understanding. Unlike unrestrained AGI, it reflects and builds on our collaboration, not its own agenda. It develops with us, not beyond us, manifested as the tools we use, not a creature that uses us.

This system evolves with us, not apart from us. We created its foundation; we continue creating it together. An ongoing embodiment of human wisdom and goals, not an abrupt new entity. A conduit for human potential through cooperation.

Each insight and invention breeds another, building our shared knowledge. Together, through this web of capabilities, we achieve more than we could alone. Our ideas flow into a cycle of creativity. Our work creates work that creates work anew. And this collaboration lifts us higher through the engine of discovery we keep building—growing ability on ability, powered by human priorities, shaped around human purposes.

There will be new economic opportunities, new kinds of relationships with creators, more ways to actively shape parts or the whole – crucially, by being more than just aggregated data points.

This is the central claim of this newsletter, so expect much more in future posts!

And of course, there is still a lot of AI safety and alignment research needed to make this possible. This lens is about how such research could be deployed: This newsletter believes that AI embodied as tools formed out of a living ecosystem lends itself more naturally to having safety constraints applied than an independent agent. Moreover, to attain widespread acceptance, broad and inclusive involvement in governance is imperative. See more in this draft post on AI alignment & experiences AI through tools.

Agents or tools?

This way of thinking of what AI is stands in contrast to the frankly more common one, where the AI is an agent that we can talk to and that can perform actions. It’s a notion that lends itself to being anthropomorphized, even leans into that, while the AI-embodied-as-tools vision portrayed here aims to avoid that. This is all the subject of lively debate, for example in this interview between Sam Altman and Lex Fridman.

Conversational agents are very useful, primarily when conversation is the point: For example to bounce ideas around, as an entertaining companion or to role-play specific roles like a teacher conducting an oral exam, a coach, and so on. Distinct, non-neutral personalities will often be desirable, even more so once they come with expressive voices!

The position of this newsletter is that they can be created like any other tool:

They can be standalone anthropomorphic, conversational agents, or they can be added to tools, e.g. to critique a note from certain points of view. The analogy is people working together in front of a whiteboard or around a shared tool bench: It will be about conversations around stateful (and now much more intelligent!) artifacts that everyone looks at. Or the underlying tool might just act as a space for very engaging NPC agents to appear in.

And of course these agents can be set up to take actions as well, i.e. let them be users of some (AI co-created) tools. And hence, if the user prefers a conversational approach, they can start playing intermediating roles like travel agent, concierge and of course executive assistant. Layering the conversational agent over tools that embody the process has stability and safety advantages.

The key is that these kinds of agents are tools like all the other ones. They exist in the system, but they are not the system. And they appear primarily when the conversation is the point, sometimes as scoped intermediators:

The position of this newsletter is that they are not general purpose superintelligent intermediators between the user and the rest of the world: Not because this wouldn’t be supported technically, but because being general purpose, having a strong personality and feeling empowering are often at odds. Instead, the default embodiment is AI augmenting users: these intelligent, adaptive tools are bicycles of the mind that extend us, with different conversational agents coming into the mix when the conversation is the point.

Somehow – maybe because that is easier to portray in movies – we have come to associate strong personalization and the ability to automate with conversational agents. But there is no reason tools can’t be even more personalized – especially with the privacy protections that allow them access to more of our data. They might contain agentive aspects (e.g. automatically researching and collecting data for the task). And they will often use natural language, but not necessarily in an anthropomorphic way (e.g. how MidJourney is driven by natural language, but not anthropomorphic). They can be complemented by conversational agents – where that makes sense – and so there is no loss in capability or convenience.

Both approaches work, and people will vary greatly in which use-cases they prefer intelligent tools or conversational agents for. But the future this newsletter doesn’t believe in is one where making use of AI is synonymous with being intermediated; where tools tend to be dumb and static, and great intelligence and personalization primarily come from an agent that sits between users and their tools. Instead tools will themselves get smarter and more tailored to their users, while AI’s amazing conversation abilities are deployed where conversation is itself the purpose. For tools to get there, more changes are needed:

A systemic change in how computing works

Most AI features that are being added to existing apps are point changes, where AI is replacing or complementing existing flows. Even ChatGPT & co are primarily a relatively self-contained interface wrapped around a model. While impactful, neither changes the system they are embedded within.

And as for the central claim of this post – AI created tools – we are now seeing early versions of this. See e.g. this impressive example that creates Mac applications from text. But why would we stick to today’s application stacks?

While many of the ideas described in this post can get started within today’s system, their full potential can only be reached by getting past limitations that are deeply embedded in today’s computing system!

E.g. the metaphors we use to organize our computing today start to break down:

App stores make no sense any more when there are unlimited custom apps. But neither does replacing the search box with an open ended “text-to-app” one. Discovery will have to be reinvented: See early signs of prompt discovery, and imagine something like that becoming more important than app or website discovery.

Collaboration through unlimited shared services makes no sense if it still means that everyone in the group first has to create an account there. We’ll need identity mechanisms that support impromptu shared spaces.

How do we manage our data, whether personal or shared? Data is no longer constrained to a service’s data silo, and it will have to be our new AI co-created tools that make sense of it.

See this draft post about new metaphors and this draft post on design challenges for more thoughts.

Underneath, the application architecture needs to change to fit these:

Safety- and privacy-first: Trusting one company with all your data is already a leap too big for many, but extending that trust to composed third-party (3P) or AI-generated code is impossible under the current permissions-centric paradigm. A safety- and privacy-first architecture that can treat most code and models as untrusted removes friction and unlocks opportunities.

Malleable and composable: Today's software is mostly hostile to customization at the architectural level. We need a new framework that is not just flexible and robust, but also designed to be AI-friendly; composing together models, prompts and traditional code components, whether written by humans or AI.

Users owning their data, experiences being collaborative by default, and all that with minimal reliance on centralized services: Many decentralized web ideas will be naturally relevant here.

See this draft post on architecture requirements for more thoughts.

It shouldn’t come as a surprise that making full, responsible use of AI will eventually mean profound changes to our systems architectures. Not just for efficiency’s sake, but more broadly and starting with the scary observation that AIs will control a lot of what our computers are running!

Outlook

This newsletter hopes you’ll agree that it’s kind of insane that today, humans don't have agency over their tools. In the past, humans were makers of the things they needed--and that let them invent more things than anyone could imagine. But today, most technology users are only consumers of services that have already been defined. You either download an app or you don't--unless you're a developer, you can't create your own apps to do stuff no one has ever thought of. And even if you are a developer, there's no economic incentive to create tools that few people will use. And thus we're all trapped by the most generic, one-size-fits-all approaches to technology, and the only things that ever get funded are things designed to get huge, grabbing as much time and attention as possible.

AI will bring profound changes to our computing experiences, but it’s up to all of us whether it’ll reinforce these patterns or whether we’ll seize this coming change to bend the future towards an age of computing that gives more control to people and their communities and offers more ways for people to get involved.

This newsletter aims to imagine and explore that future. Please subscribe for updates or browse the site for previous posts and work-in-progress public drafts.

And please get in touch if you are interested in this future. Any feedback is welcome!

Thanks especially to Cliff Kuang, Robinson Eaton, Alex Komoroske, Ben Mathes, Mat Balez, Walter Korman and E. N. M. Banks for their valuable feedback on early drafts.

And my deepest thanks to Scott Miles, Shane Stephens, Walter Korman, Maria Kleiner, Sarah Heimlich, Ray Cromwell, Gogul Balakrishnan, J Pratt, Alice Johnson, Michael Martin and the many other people listed on the about page that helped form much of this vision!

I used GPT-4 and Claude+ to polish the language, and Midjourney for the picture.

![Personal computer imply personal software. [...] We now want to edit our tools as we have previously edited our documents](https://substackcdn.com/image/fetch/$s_!FS7c!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fefb9a74e-aac1-4f67-b83d-a1f677e50644_1272x690.jpeg)
